Slim and unpolluted images are something you as a developer should strive for. When developing locally, this might not be obvious, as your workstation resources are in a sense “free” to use. That changes on hosting services such as Heroku or AWS, where image size translates into cost, and your clients may require you to drive that cost down as much as possible. Moreover, smaller images improve security by reducing the attack surface.

There are many articles further expanding on that subject. Go ahead and read them carefully if you want to find out about the other benefits of optimizing Docker images. For now, make sure you have a solid grasp of Docker basics and have your terminal and favourite code editor open.

Scope, prerequisites, and initial setup of Docker

In this example, you’ll containerize a Django app that exposes a simple API for listing and retrieving information about cities in England. For that, you’ll use the awesome django-rest-framework alongside django-cities, as well as the PostGIS database. During this tutorial, you’ll adjust your Dockerfile to optimize your build and create a slim, compact, ready-to-deploy image.

Since the proper setup of django-cities can be a bit painful, the code written for this tutorial can be used as a point of reference. However, I won’t dive into specific django-cities settings, to keep this guide Docker-oriented. Make sure the Docker version you have installed is 17.05 or higher! Older versions do not support multi-stage builds.
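If you’re not sure which version you have, a quick check along these lines can tell you. Treat it as a minimal sketch: version_ge is a helper name made up for this guide, and it assumes your sort command supports the -V (version sort) flag, as GNU coreutils does.

```shell
#!/bin/sh
# Succeeds when version $1 is greater than or equal to version $2.
# Assumption: `sort -V` (version sort) is available on this machine.
version_ge() {
    lowest="$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)"
    [ "$lowest" = "$2" ]
}

# Minimum version for multi-stage builds, as stated in this guide.
required="17.05"

# Only query Docker when the CLI is actually installed.
if command -v docker >/dev/null 2>&1; then
    installed="$(docker version --format '{{.Client.Version}}')"
    if version_ge "$installed" "$required"; then
        echo "Docker $installed supports multi-stage builds"
    else
        echo "Docker $installed is too old; upgrade to $required or newer"
    fi
fi
```

The comparison is kept in its own function so you can reuse it for other tools whose versions matter to your build.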

Navigate to your usual workspace folder and create a new project directory.

$ cd ~/workspace

$ mkdir cities-backend && cd cities-backend

If everything worked out, you should now be in the cities-backend folder.

Clone the example repo into the current directory (note the trailing dot):

$ git clone https://github.com/sleeske/docker-multi-stage-example.git .

Once cloning is completed, run the following command to build and run it:

$ docker-compose up --build

This might take a couple of minutes (especially if your internet connection is slow), so go ahead and make yourself a cup of your favourite beverage. Or stay with me and have a look at the Dockerfile located in the ./compose/django directory.

How Docker works - Dockerfile explained

The first line defines a build-time variable and sets its value to the image tag you’re going to use throughout this tutorial.

ARG PYTHON_VERSION=3.7.0-alpine3.8

You’ll be using Alpine-based images, as they are much leaner than e.g. their Ubuntu-based counterparts. If you want to know more about Alpine Linux, you’ll learn a lot from their website - I recommend checking it out.

FROM python:${PYTHON_VERSION}

This line defines the base image that the rest of the Dockerfile extends. During the build, ${PYTHON_VERSION} is replaced by the value of the variable defined in the ARG instruction above.

ENV PYTHONUNBUFFERED 1

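The ENV instruction sets an environment variable that is available during the rest of the build and inside containers started from the image. Setting PYTHONUNBUFFERED to 1 stops Python from buffering stdout and stderr, so log output shows up immediately. For the details, check out Python’s official documentation.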

RUN apk add --no-cache \
        --upgrade \
        --repository http://dl-cdn.alpinelinux.org/alpine/edge/main \
        alpine-sdk \
        postgresql-dev \
        postgresql-client \
        libpq \
        gettext \
    && apk add --no-cache \
        --upgrade \
        --repository http://dl-cdn.alpinelinux.org/alpine/edge/testing \
        geos \
        proj4 \
        gdal \
    && ln -s /usr/lib/libproj.so.13 /usr/lib/libproj.so \
    && ln -s /usr/lib/libgdal.so.20 /usr/lib/libgdal.so \
    && ln -s /usr/lib/libgeos_c.so.1 /usr/lib/libgeos_c.so

It’s good practice to keep your layer count low. Every RUN instruction creates a new layer, so instead of writing multiple RUN statements, use ‘&&’ to chain commands together and create only one new layer.

The apk add command is Alpine’s equivalent of Debian/Ubuntu’s apt/apt-get install, with a slightly different syntax. Instead of updating the package index beforehand (as you would with apt update), you can call apk add with the --no-cache flag, which fetches a fresh index without storing it in the image. The --repository flag adds a source URL for your packages. Once everything is installed, the last three commands create symbolic links for the libraries required by GeoDjango.

COPY ./requirements /tmp/requirements

RUN pip install -U pip \
    && pip install -r /tmp/requirements/dev.txt

Those two instructions are pretty self-explanatory. The content of the ./requirements/ directory is copied from your local machine to /tmp/requirements/. Afterwards, Docker executes the RUN instruction in a new image layer: it upgrades pip itself and then installs all Python dependencies defined in the copied dev.txt file.

WORKDIR /code

COPY ./src /code

COPY ./entrypoint.sh /

ENTRYPOINT [ "/entrypoint.sh" ]

Almost done. In the last four steps, Docker sets the current working directory to /code and copies the content of your local ./src folder into it. Then it copies the entrypoint.sh file to the root of your image and sets it as the entrypoint.

And that’s all!

Running migrations inside the Docker container

At this point, everything should be up and running. In a new terminal tab, type:

$ docker ps

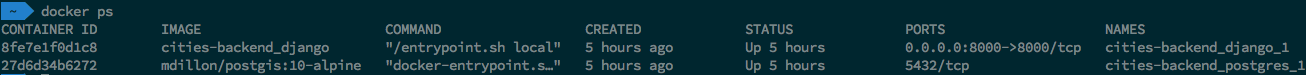

And you should see something similar to this:

A result of executing docker ps

To have everything done and dusted you still have to apply migrations, download data to populate django-cities rows in the database, and optionally create a superuser. You can do it in the following way:

$ docker exec cities-backend_django_1 python manage.py bootstrap

$ docker exec -it cities-backend_django_1 python manage.py createsuperuser

Great! Now head to either 127.0.0.1:8000/swagger/ or 127.0.0.1:8000/redoc/ and explore the API. If you feel adventurous, modify or extend it to your liking.

When you’re done, type the following command into the console:

$ docker images

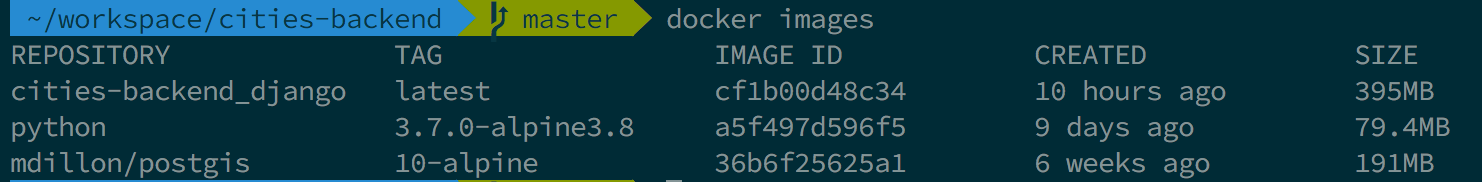

The output should look more or less like this:

A result of executing docker images

Starting from the bottom you have your database image. Next, there is alpine-based python image used as the base in your Dockerfile. It weighs under 80MB which is more than 11X less than Ubuntu-based one (around 920MB). That’s already a huge difference. On top, there is your custom image with a total size of less than 400MB. That’s twice as good as Ubuntu, but can it be even better?

Managing Docker dependencies

Let’s have a look at the installed Alpine packages. Are all of them necessary? In fact - they are not. So which ones should you keep? GEOS, PROJ4, and GDAL are the obvious choice, as GeoDjango requires them to work properly. So are gettext (used for translations) and libpq (the Postgres API written in C). Postgresql-client might seem like a good candidate for removal, as only pg_isready is being used to test whether the database can accept connections. The space reclaimed would be around 5MB, so in the grand scheme of things, it won’t make that much of a difference. However, alternative and lightweight solutions are available.
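One such alternative, sketched here under a few assumptions, is to poll the database port with the Python interpreter your image already contains. The retry and port_open helpers, as well as the POSTGRES_HOST and POSTGRES_PORT variables, are hypothetical names and are not taken from the tutorial repo.

```shell
#!/bin/sh
# Run a command repeatedly until it succeeds or the attempts run out.
# Usage: retry <attempts> <delay-seconds> <command> [args...]
retry() {
    attempts="$1"
    delay="$2"
    shift 2
    try=1
    until "$@"; do
        if [ "$try" -ge "$attempts" ]; then
            return 1
        fi
        try=$((try + 1))
        sleep "$delay"
    done
}

# Succeeds when a TCP connection to host $1, port $2 can be opened.
# Uses the Python interpreter that ships with the base image.
port_open() {
    python3 -c 'import socket, sys
socket.create_connection((sys.argv[1], int(sys.argv[2])), timeout=1).close()' "$1" "$2" 2>/dev/null
}

# Example call for an entrypoint script (host and port are assumptions):
# retry 30 1 port_open "${POSTGRES_HOST:-db}" "${POSTGRES_PORT:-5432}"
```

Keep in mind that an open TCP port is a weaker signal than pg_isready, which checks that the server actually accepts connections, so this trade-off only makes sense if those few megabytes matter to you.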

Next in line is postgresql-dev. This package is required only to build psycopg2. Once that’s done, it is no longer needed, so you can definitely get rid of it. Last but not least - alpine-sdk. This is a meta-package, which means its sole purpose is to group other packages into a set of dependencies that get installed when apk add alpine-sdk is executed. Those dependencies include essential build tools like gcc, make, binutils, libc-dev, zlib, or musl-dev. A very handy thing, but as with postgresql-dev, you only need it during the build process.

Now you only need wheels. Specifically - Python wheels. I won’t go into details as this subject is immense; however, I would recommend starting from this article or these docs to get a good understanding of the concept. To keep it concise - wheels are built packages for Python that contain the install files in a format very close to the on-disk layout. Some packages already ship as wheels (e.g. Django) and some, like SQLAlchemy, do not. However, for the latter, you can still generate a .whl package locally for your specific environment.
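The “specific environment” part is visible in the wheel file name itself, which ends with a Python tag, an ABI tag, and a platform tag. The sketch below pulls those three tags out of a file name; wheel_tags is a helper made up for this guide, and the two file names are illustrative examples rather than output from this project’s build.

```shell
#!/bin/sh
# Print the compatibility tags encoded in a wheel file name.
# Name format: name-version[-build]-python-abi-platform.whl
wheel_tags() {
    base="$(basename "$1" .whl)"
    # The last three dash-separated fields are python, abi, platform.
    platform="${base##*-}"
    rest="${base%-*}"
    abi="${rest##*-}"
    rest="${rest%-*}"
    python="${rest##*-}"
    echo "python=$python abi=$abi platform=$platform"
}

# A pure-Python wheel works on any platform.
wheel_tags "Django-2.1-py3-none-any.whl"
# A compiled wheel is tied to one interpreter, ABI, and platform.
wheel_tags "psycopg2-2.7.5-cp37-cp37m-linux_x86_64.whl"
```

This is why wheels for compiled packages have to be built in an environment that matches the one they will be installed into.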

With all that knowledge, let’s start tweaking your Dockerfile. During the build, you will create two images - an intermediary builder and the final image that you’ll actually run. The builder will mimic the environment of the final image but will also contain all the build tools required to generate wheel packages. Then, in the final image, you are going to install your Alpine run-time dependencies from the remote repository and your Python dependencies from the wheels generated in the builder.

Optimizing Dockerfile

ARG PYTHON_VERSION=3.7.0-alpine3.8

FROM python:${PYTHON_VERSION} as builder

ENV PYTHONUNBUFFERED 1

The first couple of lines stay pretty much unchanged; the only difference is a custom name for your build stage. Naming a stage is optional, since stages can also be referenced by their index, but a name makes later references such as COPY --from=builder much easier to read.

RUN apk add --no-cache \
        --upgrade \
        --repository http://dl-cdn.alpinelinux.org/alpine/edge/main \
        alpine-sdk \
        postgresql-dev

In this step, your build-time dependencies are being installed.

WORKDIR /wheels

COPY ./requirements/ /wheels/requirements/

RUN pip install -U pip \
    && pip wheel -r ./requirements/dev.txt

Although this might look similar, take a closer look at the last line. Instead of pip install, pip wheel is called. This command either collects or builds wheels for the downloaded packages and saves them in the current working directory. Let’s move on to the final image.

FROM python:${PYTHON_VERSION}

ENV PYTHONUNBUFFERED=1

RUN apk add --no-cache \
        --upgrade \
        --repository http://dl-cdn.alpinelinux.org/alpine/edge/main \
        gettext \
        libpq \
        postgresql-client \
    && apk add --no-cache \
        --upgrade \
        --repository http://dl-cdn.alpinelinux.org/alpine/edge/testing \
        geos=3.6.2-r0 \
        proj4=5.0.1-r0 \
        gdal=2.3.1-r0 \
    && ln -s /usr/lib/libproj.so.13 /usr/lib/libproj.so \
    && ln -s /usr/lib/libgdal.so.20 /usr/lib/libgdal.so \
    && ln -s /usr/lib/libgeos_c.so.1 /usr/lib/libgeos_c.so \
    && rm -rf /var/cache/apk/*

Keep in mind that you’re still in the same Dockerfile. The commands look pretty similar to those in the original Dockerfile, except that only your run-time dependencies are installed and the contents of /var/cache/apk are removed recursively at the end. Since --no-cache already keeps apk from storing its package index there, this extra operation is mostly a safety net against stray cache files and will save you a couple of MB at most.

COPY --from=builder /wheels /wheels

RUN pip install -U pip \
    && pip install -r /wheels/requirements/dev.txt \
        -f /wheels \
    && rm -rf /wheels \
    && rm -rf /root/.cache/pip/*

This is where the magic of multi-stage builds kicks in. The contents of /wheels are copied from the builder image by passing the --from parameter to COPY. Then pip install is run with the additional -f (--find-links) flag, which tells pip to look for archives in the specified directory, so the prebuilt .whl files are used instead of compiling from source. If you want to rule out any fallback to PyPI, add --no-index as well. After that, both /wheels and the contents of /root/.cache/pip are removed, as you don’t need them anymore. Note that deleting /wheels in a later layer hides the files but does not shrink the earlier COPY layer. The final steps stay the same.

By the way, the full Dockerfile is available here - make sure to check it out and compare it with your local one if any issues arise.

Let's build your image and see if there is any improvement to the image size.

$ docker-compose build

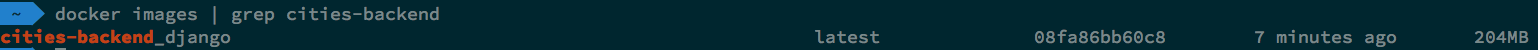

$ docker images | grep cities-backend

A result of executing docker images | grep cities-backend

As you can see, you’ve managed to trim another half of your image to a total size of around 200MB, which is a phenomenal improvement. And you did that without being extremely strict - e.g. you kept postgresql-client and installed Python development dependencies, so there is still some space to be gained in production-ready images.

Keep in mind that multi-stage builds are not a flawless solution. They have their own cons, such as longer build times (especially initial ones), as more layers get invalidated when dependencies change. This is a subject of many debates on the internet, and, as always, I highly recommend reading them through.
