User experience is everything in software modernization. After all, if your system is in decline, it’s the users who suffer – and their dissatisfaction has an impact on your organization’s wellbeing. Knowing that, you might be asking yourself the following question: How do I ensure the maximum usability of my software regardless of the passing time? Not so fast, though. Before we move on to the solutions, you need to understand that the key to effective treatment is the correct diagnosis.

You might have heard about people who underwent serious medical procedures that didn’t help – all because there wasn’t a perfect match between the treatment and the condition. The same goes for software modernization: if you wish to improve the feel of your digital product, you must first identify its users’ pain points and diagnose UX problems.

UX research process

To gather data and produce insights useful in diagnosing UX problems, you should resort to UX research. Does the name ring the startup bell? It might, as the user experience research is often conducted by the early-stage founders who care about delivering an impactful product that solves actual user problems. However, this doesn’t mean that UX research can’t support the development of more mature products like yours.

The broad category of user experience research encompasses a variety of methods and tools, which are suitable for different stages of the project work. To prove our point, let us refer to the Nielsen Norman Group’s infographic showcasing UX activities in an example product design cycle:

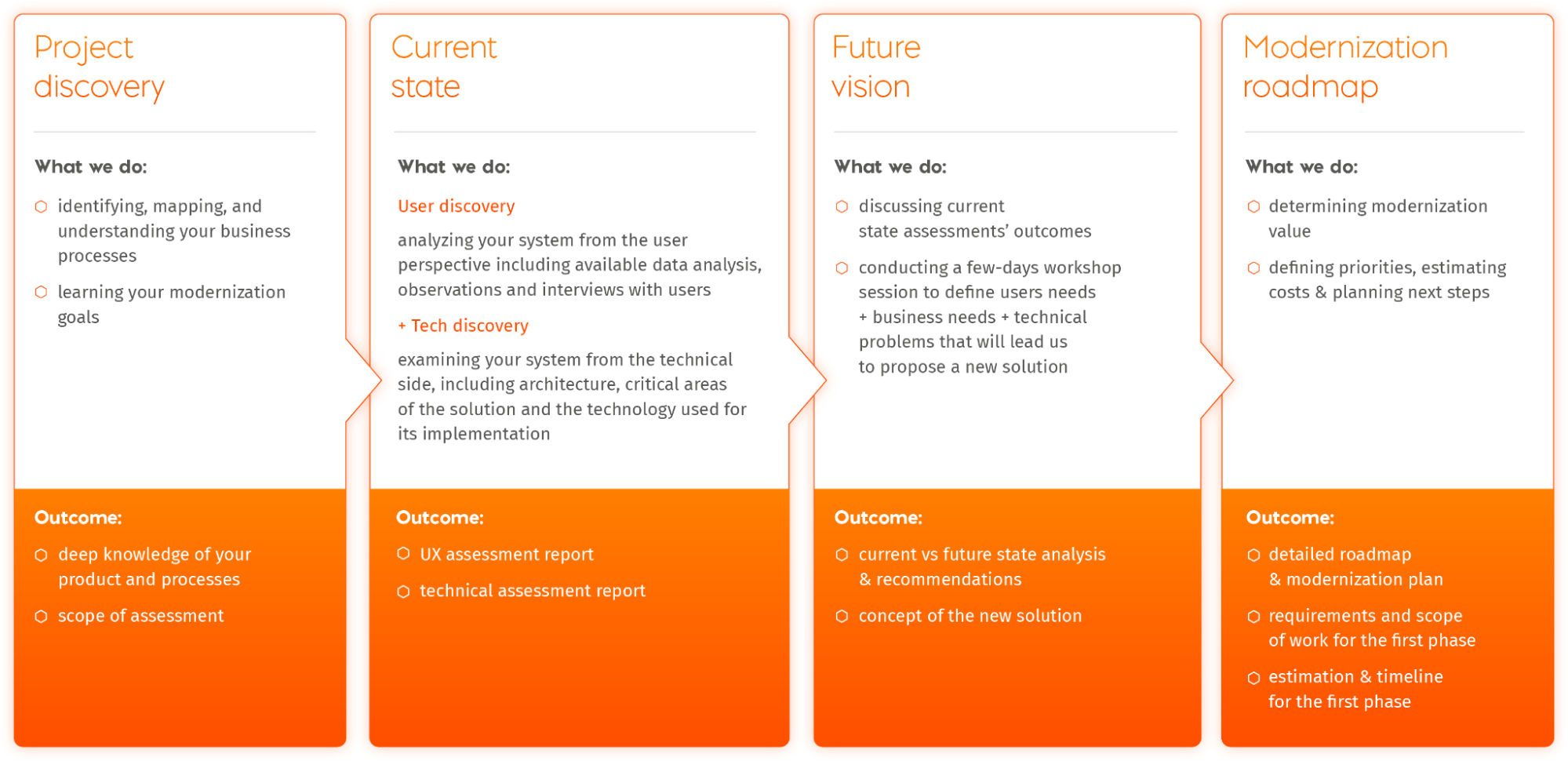

As software modernization is all about improving the already existing system, we propose that the UX analysis workflow looks as follows:

- Understanding the business process and needs – we start by concentrating on the big picture and understanding the product. At this point, we conduct stakeholder and competitive analyses, as well as discuss requirements. All that leads to defining the business goals that the UX modernization should help us achieve and creating a high-level roadmap.

- Detailed system analysis – after the preliminary exam, we subject our software patient to a thorough check-up. Focusing on the user perspective, we select UX research tools and methods that fit the modernization project best (you’ll read more about them in the following section). From the technical perspective, which also helps us spot UX-related issues, we review architecture, infrastructure, integrations, QA and deployment practices, and run a tech audit.

- New solution modeling – drawing on the data gathered so far, we utilize tools like user personas, story mapping, or design-level event storming to plan the next steps on the road to improved user experience.

UX analysis in modernization projects

When choosing a UX research toolkit, you need to consider several factors, including the objectives, data, and subjects (aka users). Considering the abundance of methodologies, we’ve decided to list the ones best-suited for diagnosing UX problems in software modernization and divide them into three categories: business-oriented, quantitative, and qualitative analysis. Let’s get the check-up started!

Business-oriented analysis

When diagnosing UX problems of your legacy product, it might be tempting to focus on the end-users right away. It’s not necessarily a bad strategy. Nonetheless, starting with preparatory business-oriented analysis will give you an invaluable insight into the context, limitations, and opportunities that influence the user experience of your software daily.

Stakeholder mapping and analysis

Stakeholders are all individuals who have an interest in the modernization project, whether they’re internal or external to your organization. These can be the board members, the C-level executives, the managers, and the users. All of these people are affected by – and can affect – your modernization endeavor, which is why stakeholder mapping is key to successful legacy UX improvement.

Stakeholder mapping boils down to identifying who your stakeholders are, how they’re related to the outdated software, what motivates them, and what expectations they hold regarding the project. Knowing that allows us to formulate a reasonable UX research plan and make more informed modernization decisions – not to mention that it minimizes the risk of coming across unexpected roadblocks mid-process.

One way to map stakeholders is through a power-interest matrix, whereby each quadrant calls for a different stakeholder-management strategy. To learn more about this tool, we recommend you read Nielsen Norman Group’s article on Stakeholder Analysis for UX Projects.
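To make the quadrant idea concrete, here is a minimal Python sketch of a power-interest matrix. The four strategy labels follow the conventional wording for this kind of grid; the 1-10 scoring scale, the threshold of 5, and the sample stakeholders are illustrative assumptions rather than part of any specific method.

```python
from dataclasses import dataclass

# Conventional strategy labels for the four quadrants of a power-interest grid,
# keyed by (high_power, high_interest).
STRATEGIES = {
    (True, True): "manage closely",
    (True, False): "keep satisfied",
    (False, True): "keep informed",
    (False, False): "monitor",
}


@dataclass(frozen=True)
class Stakeholder:
    name: str
    power: int     # assumed 1-10 score: ability to influence the project
    interest: int  # assumed 1-10 score: how much the outcome matters to them


def strategy_for(stakeholder: Stakeholder, threshold: int = 5) -> str:
    """Return the quadrant strategy; scores above `threshold` count as high."""
    high_power = stakeholder.power > threshold
    high_interest = stakeholder.interest > threshold
    return STRATEGIES[(high_power, high_interest)]


# Made-up stakeholders, for illustration only.
stakeholders = [
    Stakeholder("CTO", power=9, interest=8),
    Stakeholder("Board member", power=8, interest=3),
    Stakeholder("Support agent", power=2, interest=9),
    Stakeholder("External auditor", power=3, interest=2),
]

for s in stakeholders:
    print(f"{s.name}: {strategy_for(s)}")
```

In practice, the scores would come out of the stakeholder conversations themselves, and the threshold is a judgment call rather than a fixed rule.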

Risks and opportunities assessment

As a manager or a C-level executive, you’re most likely familiar with SWOT analysis. A similar assessment can prepare you for undertaking UX modernization. Delving into the context of your software, e.g., using the Context Canvas®, you uncover the user experience-related risks and opportunities that’ll guide your modernization roadmap.

Functional and non-functional requirements analysis

Last but not least comes the product-oriented perspective. At this stage, we look at functional and non-functional requirements – or what the system does and how it works. This part of the business-oriented analysis can inform us about the implemented solutions, the legal constraints, or any other agreements in force. In other words, the requirements analysis allows us to set boundaries for UX modernization and ensure our improvement plans are viable.

Quantitative usability analysis

Second on our list are quantitative UX research methodologies, which provide hard numerical data on the users’ performance and the perceived usability of a digital product. The quantitative analysis gives us insight into how users interact with the software and which UX problems they face most frequently. It usually involves a greater number of users than the qualitative analysis, and its summary tends to contain data about the statistical significance of the results.

Data analysis

One way to gather quantitative insights on usability is through user analytics. This way, you get to capture “what the end users actually do with your product, which can be quite different from what they say”.

The toolkit depends on the type of software in question; however, Google Analytics is the go-to tool in many cases. With dozens of standard and custom reports, Google Analytics can show you all sorts of UX problems that need to be addressed, such as confusing navigation, which manifests itself in users swaying back and forth between pages, or inadequate content design causing users to bounce. Quantitative usability data can also be obtained with the help of Business Intelligence tools and internal application data – the sky is truly the limit when it comes to the toolkit at this stage of usability analysis.
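As an illustration of how internal application data might surface the back-and-forth navigation mentioned above, the following Python sketch counts A → B → A page patterns in ordered page-view sequences. The session format, the page paths, and the `count_back_and_forth` helper are all hypothetical; this is not the Google Analytics API, just a sketch of the idea applied to an exported log.

```python
from collections import Counter


def count_back_and_forth(pages: list[str]) -> Counter:
    """Count A -> B -> A patterns in one session, keyed by the (A, B) pair."""
    patterns = Counter()
    # Slide a window of three consecutive page views over the session.
    for first, middle, last in zip(pages, pages[1:], pages[2:]):
        if first == last and first != middle:
            patterns[(first, middle)] += 1
    return patterns


# Hypothetical sessions: ordered page views per session ID.
sessions = {
    "session-1": ["/home", "/products", "/home", "/products", "/home", "/pricing"],
    "session-2": ["/home", "/pricing", "/checkout"],
}

totals = Counter()
for pages in sessions.values():
    totals += count_back_and_forth(pages)

# Most frequent detours first; these are candidates for a closer look.
for (origin, detour), hits in totals.most_common():
    print(f"{origin} -> {detour} -> {origin}: {hits}")
```

A high count for a given pair does not prove a navigation problem on its own, but it points to the places worth examining with qualitative methods.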

Surveys

Another quantitative research method is conducting surveys. Regardless of the format of your choice (paper or online) or length, surveys reveal users’ opinions and can be used to back up your qualitative research findings on a larger scale. This may prove invaluable for gaining internal stakeholder buy-in for modernization.

Some of the key things to remember when creating usability-oriented surveys are to:

- use unbiased questions,

- ask one question at a time,

- use balanced rating scales,

- let the surveyed users share their thoughts in open-ended questions.

Qualitative usability analysis

While the quantitative analysis says “what”, qualitative research provides us with insights on the “why” and “how” in the process of diagnosing UX problems. Relying primarily on user research – but also on usability audits – it delivers “observational findings that identify design features easy or hard to use”. Given that, and the fact that a qualitative study of 5 users is likely to uncover 85% of the usability problems, it’s safe to say that qualitative analysis is suited for informing redesign decisions relatively quickly and cheaply.
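The “5 users, 85%” figure is commonly traced back to a simple model published by Nielsen and Landauer, in which the share of usability problems found with n test users is 1 − (1 − L)^n. The short Python sketch below evaluates that formula; the detection rate L = 0.31 is the average those authors reported and is an assumption here, as the real rate varies from project to project.

```python
def share_of_problems_found(test_users: int, detection_rate: float = 0.31) -> float:
    """Share of usability problems found by `test_users` participants.

    Implements 1 - (1 - L)^n, where L (`detection_rate`) is the assumed
    probability that a single user runs into any given problem.
    """
    return 1 - (1 - detection_rate) ** test_users


for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users: {share_of_problems_found(n):.0%}")
```

With L = 0.31, five users yield roughly 84%, which is where the rounded 85% comes from. The curve flattens quickly after that, which is the argument for running several small studies instead of one large one.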

Individual in-depth interviews (IDIs)

Our starting point in the qualitative category is individual interviews. Engaging relevant modernization stakeholders in a conversation in a more intimate setting, IDIs give us insight into their concerns, problems, and attitude. As this research method allows users to elaborate on their opinions, it makes a great addition to observation – which we’re going to discuss in a moment.

The first group to be interviewed in the UX modernization process are the internal stakeholders. Although we’ve already indicated that in the section above, let us state once again that the success of the legacy system redesign depends largely on stakeholder involvement. During stakeholder IDIs, one gets to learn about the business goals and technical limitations that need to be considered during the future state modeling stage. These insights are precious if you’re about to team up with a modernization tech partner who needs to have a profound understanding of your organization’s goals and the way your legacy system functions. Stakeholder IDIs also provide an opportunity to uncover the user pain points reported directly to the internal stakeholders, e.g., by the support department.

The people that typically pop into our minds when we think of IDIs, though, are the end users. If the modernization candidate is an enterprise application, interviews will be conducted with your organization’s employees. Interacting with the system daily, they are an ideal source of knowledge on how the outdated solution influences their performance, productivity, and morale. For example, the in-depth interview can reveal that the employees find the current user flow perplexing or that the interface makes it difficult to access certain elements of the system, especially for the newly onboarded people.

On the other hand, if we’re about to modernize a commercial app or a client-facing component of the system, the interviews will understandably focus on the customers. While there is no universal set of questions to ask, the most important ones in the context of revamping the legacy user experience include:

- How would you assess the usability of the app on a scale from 1 to 10, where 1 means “very difficult to use” and 10 means “very easy to use”? Please, justify your choice.

- What bothers you in the app? What’s most problematic about using the app?

- If we could make [a limited number of] improvements, what would you like them to be?

Regardless of the type of end-users, though, it’s also advisable to interview at least one person who hasn’t used the app in question so far. Those accustomed to the solution may brush off the legacy inconveniences, whereas the newcomers are like a breath of fresh air.

Usability tests

The thing about IDIs is that they inform you about opinions and feelings. For this reason, it’s best to pair them with usability evaluation, which addresses the interaction of the users with the software. By observing the target audience “in action”, the researchers conducting usability tests check whether the digital product is understood, whether it is intuitive to operate, and which parts of the system turn out to be most problematic.

Since observation is key here, usability tests provide us with unbiased data about our users and their pain points. How come? To quote Jim Ross, “the information people provide during interviews isn’t always accurate or reliable” because interviewees tend to think too much about what their answers will be used for. On the other hand, observing “participants’ natural behavior, without interrupting them or affecting their behavior” allows one to truly understand what’s behind their organic interactions with a given app.

As easy as it may sound, observation calls for preparation to inform the UX modernization process. Before kicking off this type of user research, it’s crucial to:

- define research goals and decide whether the observational study needs to be structured or not (more on that below),

- select a representative sample of the user base,

- ensure that an experienced UX designer runs the observation and reports on it.

There are two more things to bear in mind when conducting user observation. One is minimizing the observer effect by establishing trust between the researcher and the user. The other is observing “not only what people are doing, by interpreting their body language and gestures, but also what people are not doing”. This can indicate potential UX modernization candidates, e.g., messy information architecture causing users to leave out parts of the system or defective navigation having them impatiently move back and forth across the website.

Now that we’ve discussed observation in detail, let us introduce you to three common usability testing techniques.

- Moderated remote and lab tests

As was the case with other methodologies mentioned above, usability research encompasses a range of techniques, the first of which are moderated tests. This sort of testing involves a couple of steps. First, the researchers prepare usability testing scenarios focused on a given problem. Then, the UX experts introduce users to the tasks and observe how they approach them. Among the things researchers focus on at this stage are errors and user reactions. The last step is the summary, e.g. a report that singles out all flaws in the user flow.

Moderated tests can take place either in a usability lab, where the participants and the researchers are present in person, or remotely, over the internet or by phone. While the former gives greater control over the procedure and provides extra data points, such as body language, facial expressions, or even the possibility to gather insights from eye-tracking equipment, the latter is more affordable and easier to arrange. Depending on the location, different tools will be involved – but in most cases, researchers at least record the testing sessions.

- Unmoderated remote tests

On the other side of the supervision spectrum lie the unmoderated remote tests. As the name suggests, there is no script here and the user is supposed to explore the system on their own. One example of unmoderated testing is session recordings. According to Hotjar, these are a great way to “spot major problems with a site's intended functionality, watch how people interact with its page elements such as menus and CTAs, and see places where they stumble, u-turn, or completely leave”.

- Guerilla tests

Finally, there are also guerilla tests or hallway usability tests. As they can be conducted pretty much everywhere, including your organization’s hallway, and they typically don’t exceed a quarter of an hour, guerilla tests are a budget-friendly way to uncover UX issues that haunt your system. Be careful, though, as such quick tests should never constitute the only basis for your modernization roadmap.

Usability audit & heuristic evaluation

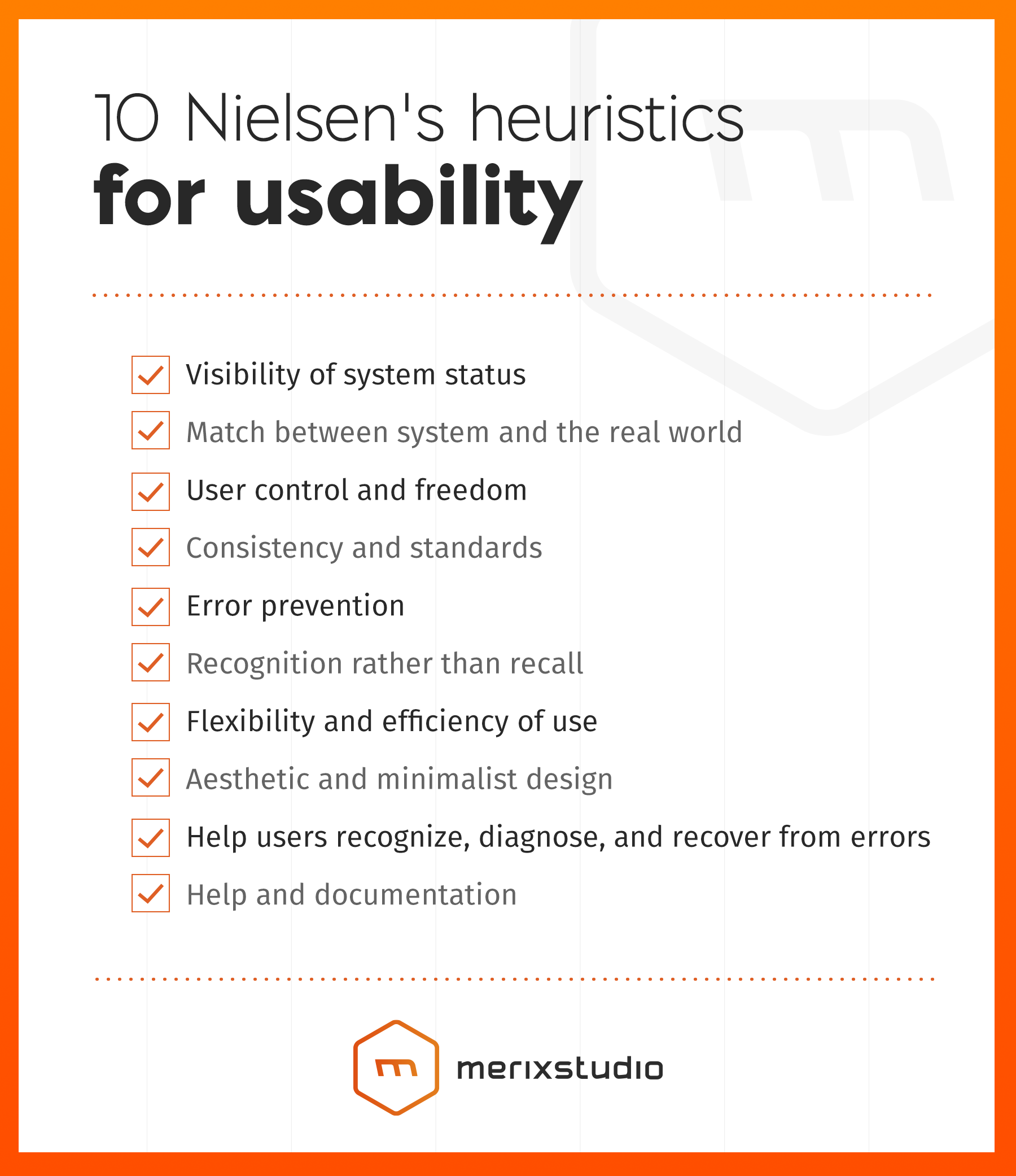

We’re closing the list of qualitative research methods with a technique that doesn’t call for user involvement but checks if the system suffers from UX-related illnesses: heuristic evaluation, also referred to as the usability audit.

As defined by the Nielsen Norman Group, heuristic evaluation entails “having a small set of evaluators examine the interface and judge its compliance with recognized usability principles or the heuristics”. In simple terms, this technique is all about having experienced UX designers check your system against established standards and best practices that underpin a sound user experience.

Throughout the usability audit, the UX specialists identify the issues and assess their severity, which helps draft a modernization roadmap. For instance, if there’s something wrong with a user flow or navigation, it’s most likely a critical problem that should be addressed first. If, on the other hand, there are buttons that could fit the UI better, they’re probably going to end up on the “minor” side of the spectrum.

Being more theoretical in nature, heuristic analysis forms a great starting point for further user research, during which the researcher can verify their hypotheses and gather invaluable user feedback.

Workshops

Now that we’ve discussed different UX analysis methodologies, we’d like to draw your attention to workshops, which are more of a way to do your research than a research subtype. The unique thing about workshops is that they gather all people who impact the success of the modernization endeavor, from internal stakeholders, through developers, to designers. As a result, they provide a stimulating collaborative environment – whose importance in the UX modernization process simply can’t be overstated.

Workshops are often an eye-opener about the system and the context around it. To quote one of our modernization clients, “the workshop has made me understand my application better than I have in the past 12 years from using it”. Not to mention that they simply expedite both the research and the future state modeling processes.

📙 Case study of a Business Insurance Management System modernization

If we’re dealing with a mature system, the pre-development phase is key to understanding its intricacies and diagnosing the legacy symptoms right. That’s why when a US-based insurance agency approached us with the task of improving their application’s user experience, we knew that a workshop was the way to go. We invited the client to our office, gathered top-notch designers and developers, and together identified all areas for improvement. Thanks to close collaboration, we were able to propose UX enhancements that resulted in giving the system’s users more control over processes and increasing their productivity.

Having stated these benefits, let’s look at some UX research methods that work well in the pre-development workshop environment.

User personas and user journey

The value of workshops stems largely from the fact that they give researchers access to the know-how of the participants, who most often represent different facets of the project. This gives all participants an opportunity to open up, speak their minds, and get as many perspectives on the work as possible.

The knowledge gathered during the workshop can serve to create personas and user journeys informed by the designers’ research and real-life insights. To ensure that the workshop’s outcomes accurately reflect the users and the customer experience, it’s advisable to invite frontline employees to participate in the pre-development process.

Co-creation

Being highly collaborative, workshops also facilitate co-creation, which Prof. Thorsten defines as “an active, creative and social process, based on collaboration between producers and users, that is initiated by the firm to generate value for customers”. In other words, co-creation empowers individuals – including the internal stakeholders, end users, IT specialists, and researchers – to generate ideas, brainstorm them, and develop usable solutions.

Diagnose UX problems first to facilitate treatment

Even though it doesn’t require creating a brand new digital product from scratch, or maybe precisely for this reason, software modernization is anything but a no-brainer. Its goal is to make the outdated system intuitive, clear, and usable again – which is an impossible task unless you do your research and adopt a user-centric approach.

Have you set your mind on UX modernization? Now's the time to get stakeholders on your side!
